OPENMUL CONTROLLER


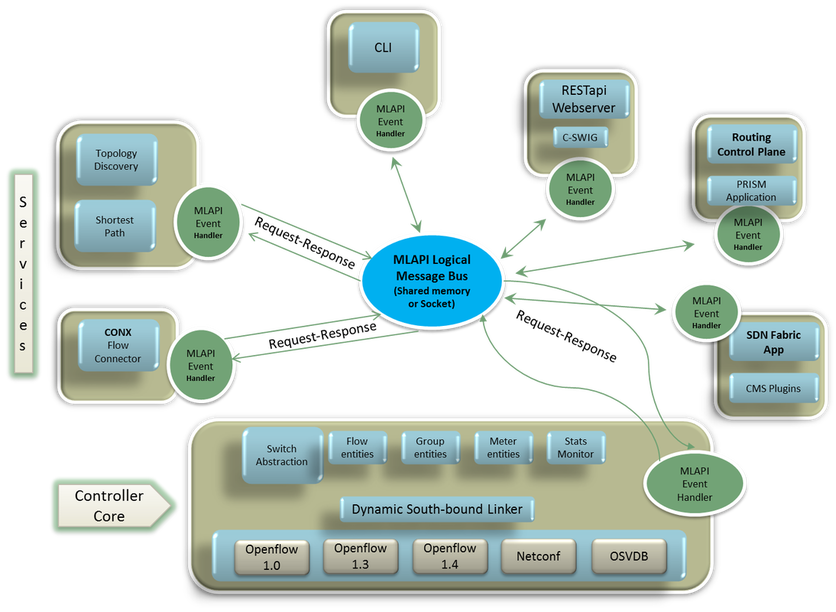

OpenMUL Controller provides a base controller platform for SDN/OpenFlow. It is a lightweight SDN/OpenFlow controller, written from scratch almost entirely in C, that is designed for high flow-handling performance (flow download rate and latency) and provides a very stable application development platform.

The following are the major components of the OpenMUL controller:

1) MuL director/core

a. The main component of MuL

b. Handles all low-level switch connections and performs OpenFlow processing

c. Provides an application programming interface in the form of MLAPIs (mid-level APIs)

d. Provides OpenFlow-agnostic (more generally, southbound-protocol-agnostic) access to devices

e. Supports hooks for a high-speed, low-latency learning (reactive) processing infrastructure

f. Keeps all flows, groups, meters and other switch-specific entities in sync across switch and controller reboots or failures.

2) MuL infrastructure services

a. These provide basic infrastructure services built on top of the MuL director/core

b. Currently available:

i. Topology discovery service

This service uses LLDP packets to discover the network switch topology. It can also detect and prevent network loops on demand, in close interaction with mul-core.

ii. Path finding service

This service uses the Floyd-Warshall (all-pairs shortest path) algorithm to calculate the shortest path between any two network nodes. It supports ECMP, and route selection can be influenced by external parameters such as link speed and link latency. It is designed to be fast and scalable (tested with up to 128 nodes), and it provides an extremely fast shared-memory-based API for querying routes.

iii. Path Connector Service

This service provides a flexible interface for applications to install flows across a path. Most SDN applications view the SDN island as a single domain and want to operate on a flow across the whole island. Maintaining routes, calculating path routes and handling link failovers inside every application would be cumbersome; this service completely hides such complexities from the application and its developer. Another important feature of this service is that it separates an SDN domain into core and edge switches. Flows requested by applications are maintained only at edge switches, which keeps the flow budget (an expensive resource in the OpenFlow world) at the core switches to a bare minimum.

3) MuL system apps

a. System apps are built using a common API provided by mul-director and mul-services

b. They are largely unaware of OpenFlow and are therefore designed to work across different OpenFlow versions, provided the switches support the common requirements of these apps

c. Currently available:

i. L2switch: A bare-bones app modeled on legacy L2 learning-switch logic. It provides a fast way to verify network connectivity in a small network setup.

ii. CLI app: Provides a common CLI-based provisioning tool for all MuL components.

iii. NBAPI webserver: Provides RESTful APIs for the MuL controller. The webserver is written in Python.

The modules described above provide a robust environment for developing higher-level applications such as PRISM, an SDN fabric or a TAP application.
